The Challenge Dataset 2022

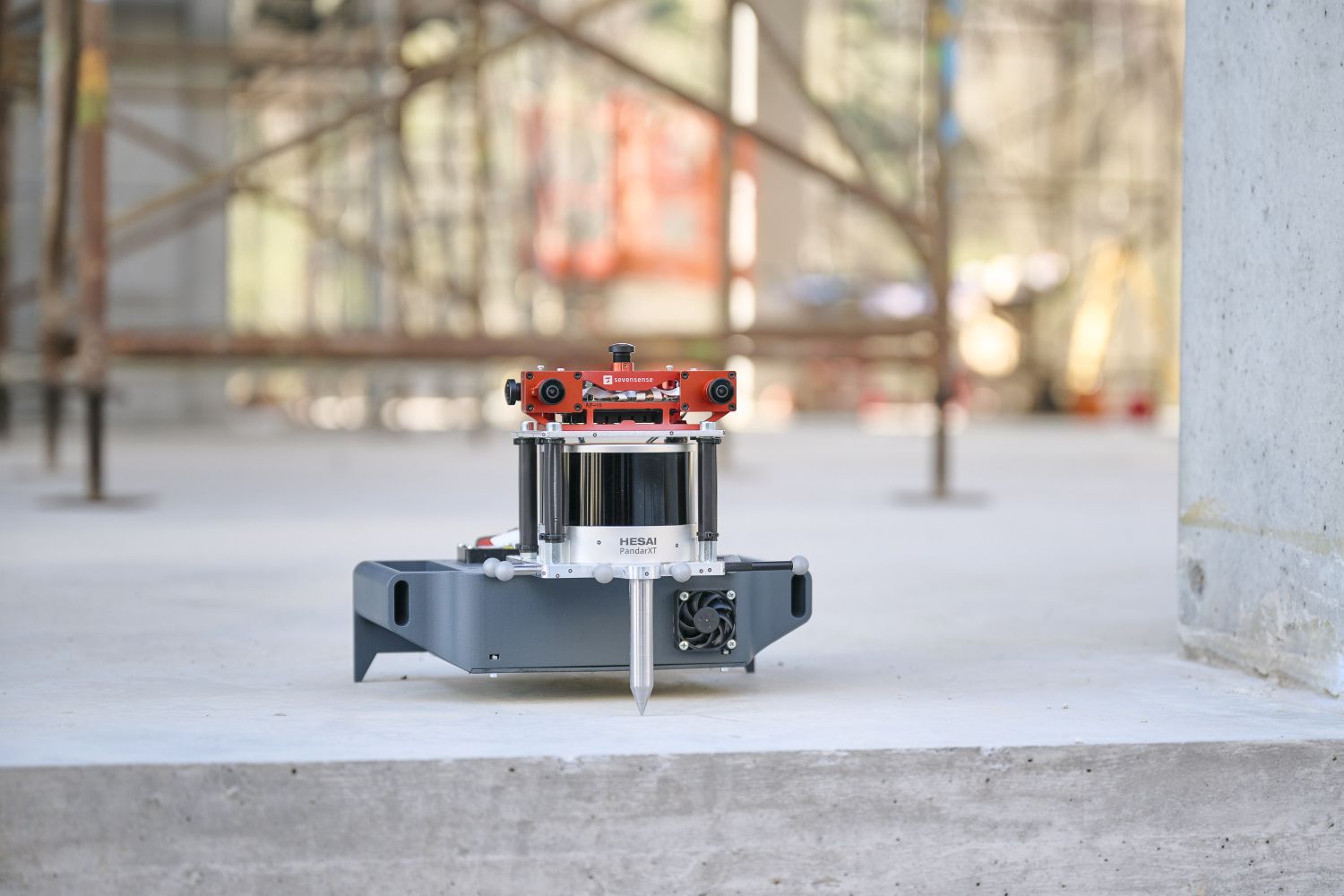

This benchmark is based on the HILTI-OXFORD Dataset, which was collected on construction sites and at the famous Sheldonian Theatre in Oxford, and which provides a wide range of difficult problems for SLAM.

All these sequences are characterized by featureless areas and varying illumination conditions that are typical of real-world scenarios and pose great challenges to SLAM algorithms developed in confined lab environments. Millimeter-accurate ground truth is provided for each sequence. The sensor platform used to record the data includes several visual, lidar, and inertial sensors, which are spatially and temporally calibrated.

Challenge Sequences

| Sequence | Scene | Top-Down View | Difficulty | Bag |

|---|---|---|---|---|

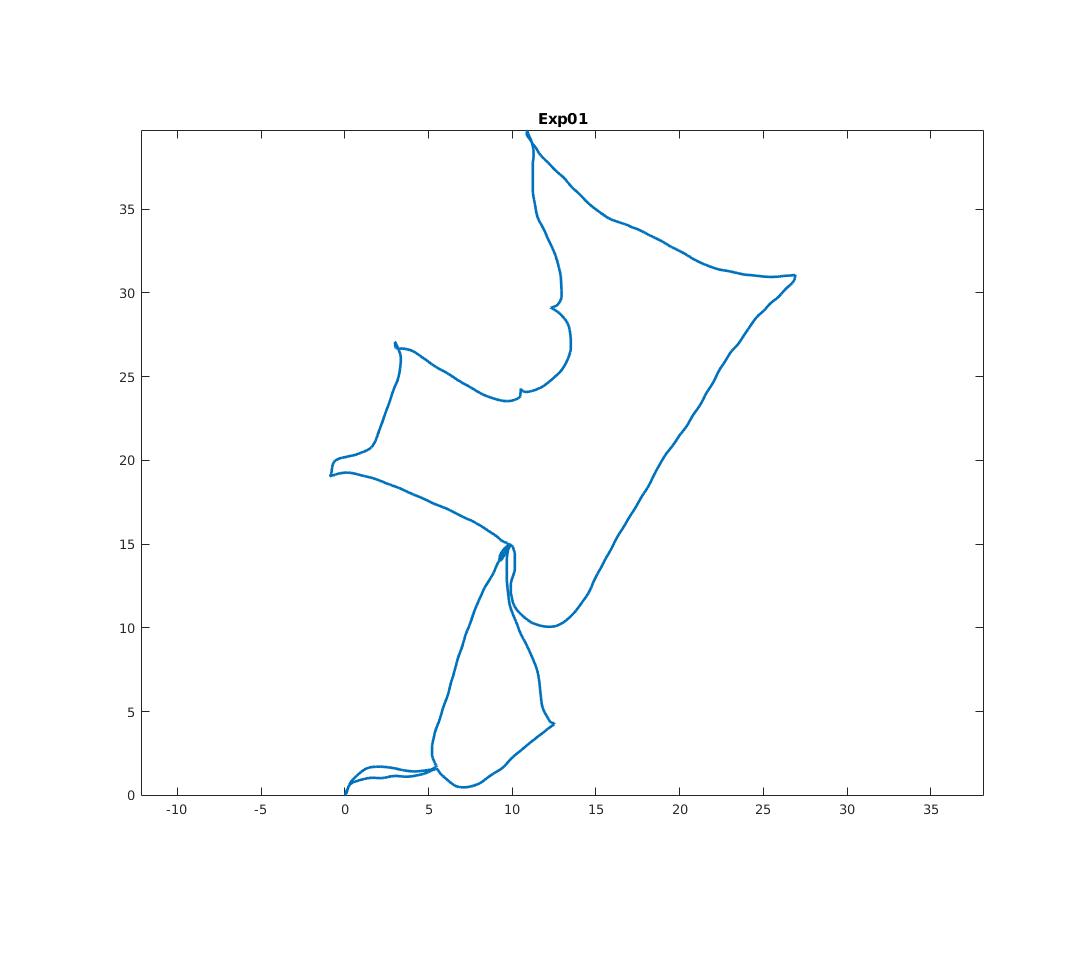

| Exp01 Construction Ground Level |

X

|

X

|

Easy | 18 GB |

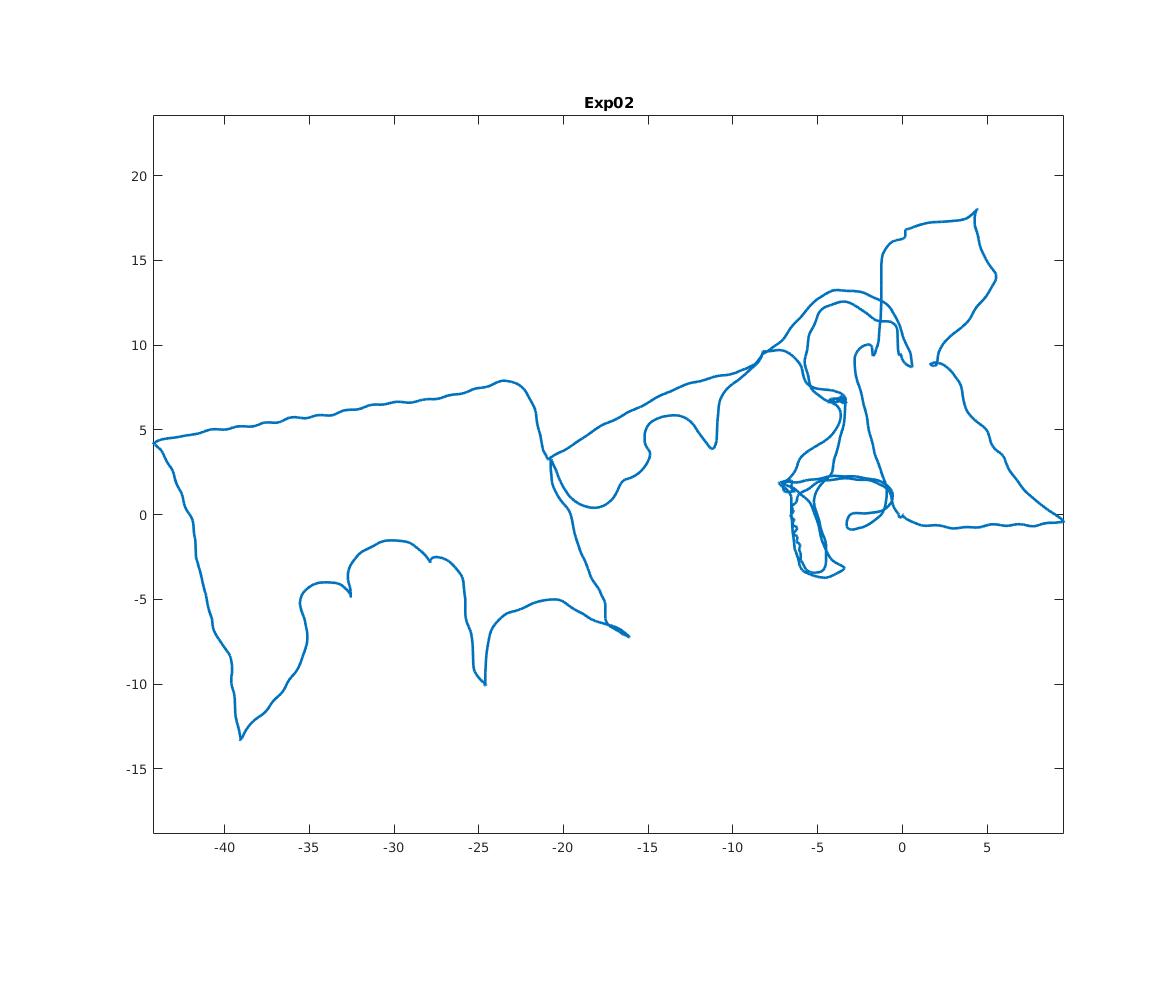

| Exp02 Construction Multilevel |

X

|

X

|

Medium | 34 GB |

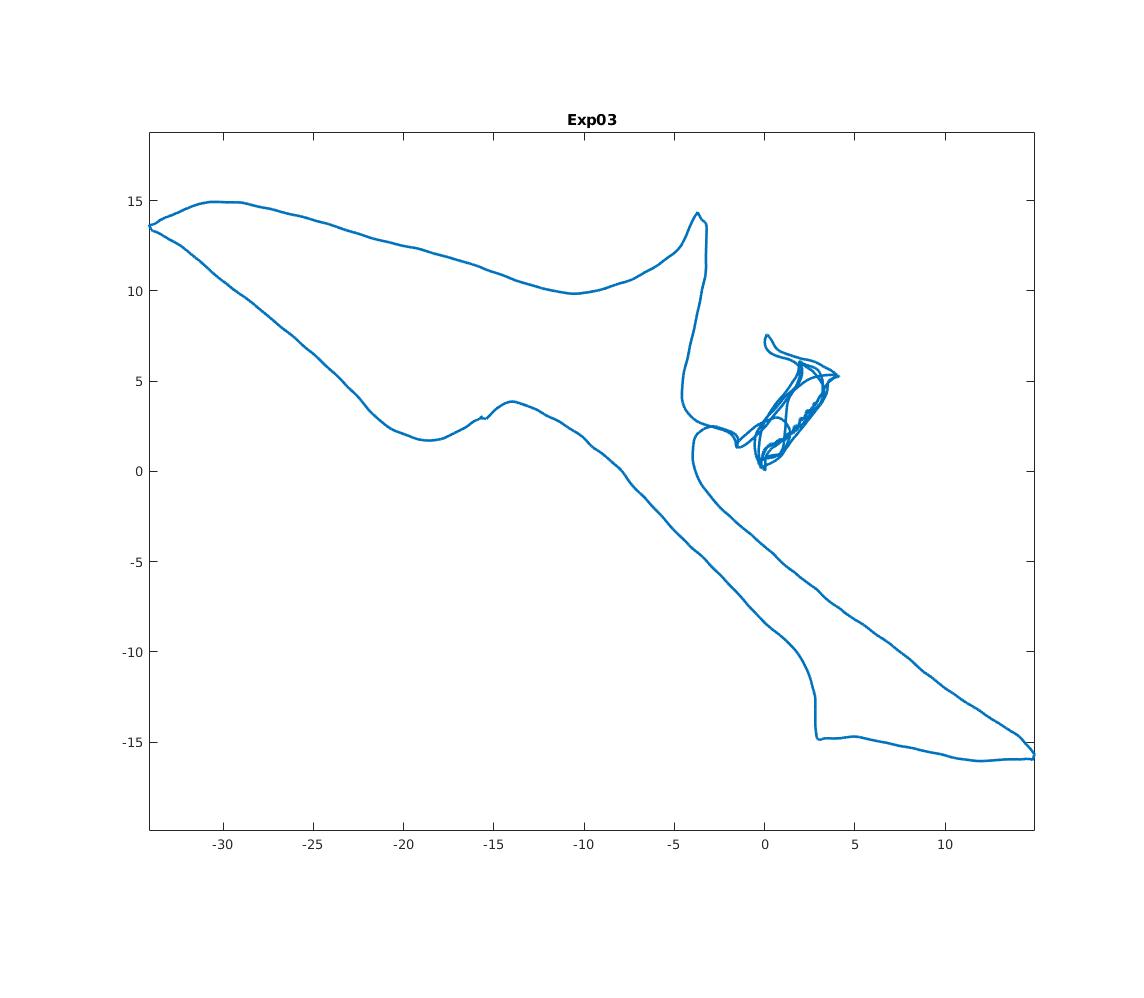

| Exp03 Construction Stairs |

X

|

X

|

Hard | 21 GB |

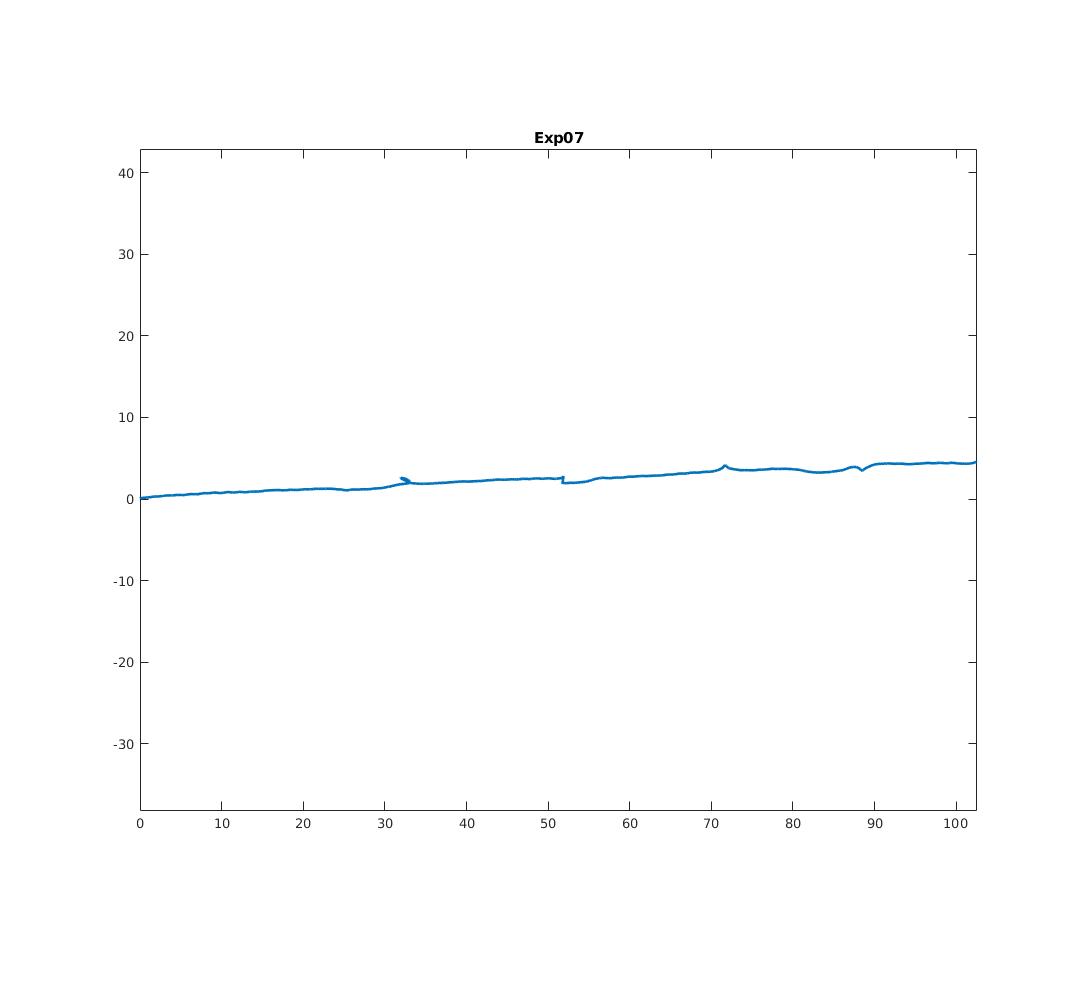

| Exp07 Long Corridor |

X

|

X

|

Medium | 11 GB |

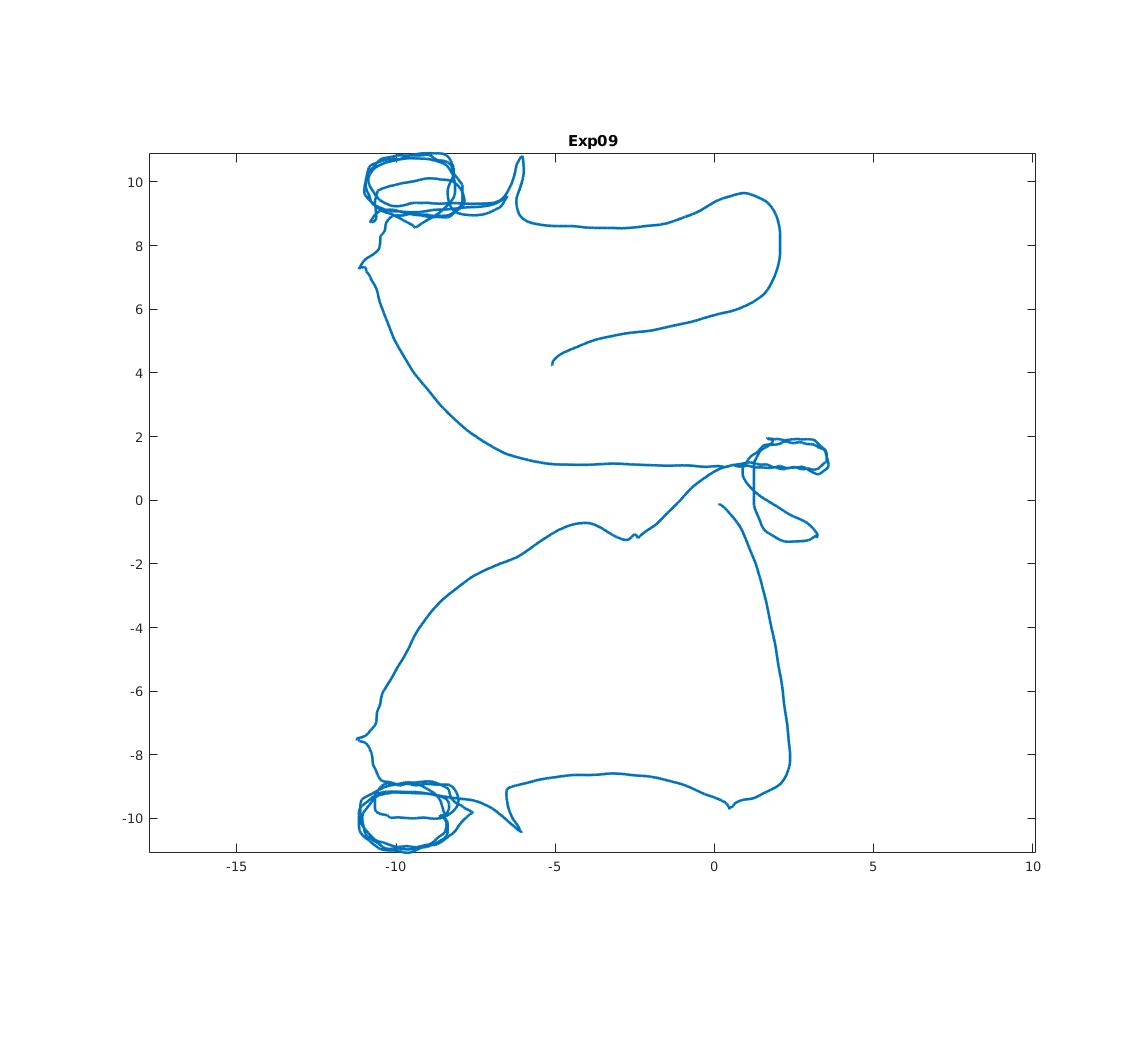

| Exp09 Cupola |

X

|

X

|

Hard | 33 GB |

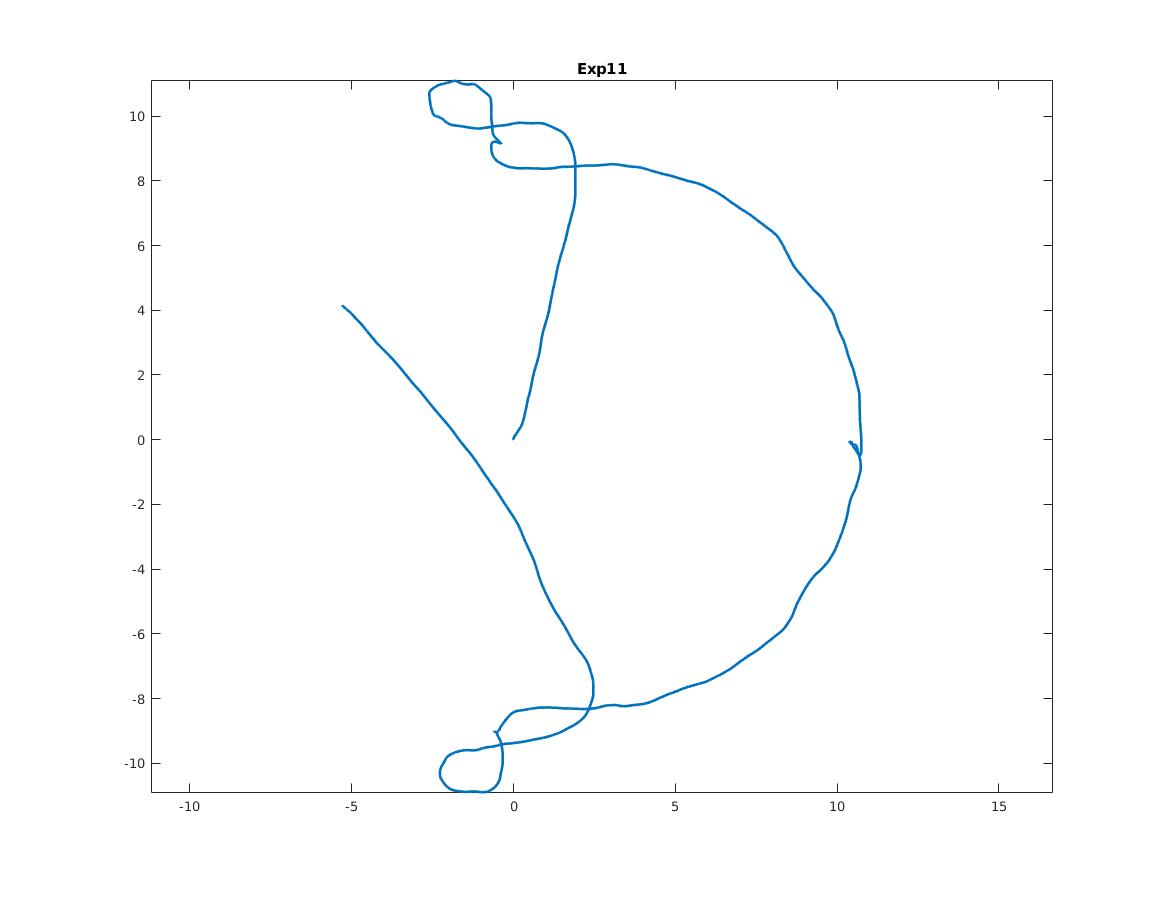

| Exp11 Lower Gallery |

X

|

X

|

Medium | 12 GB |

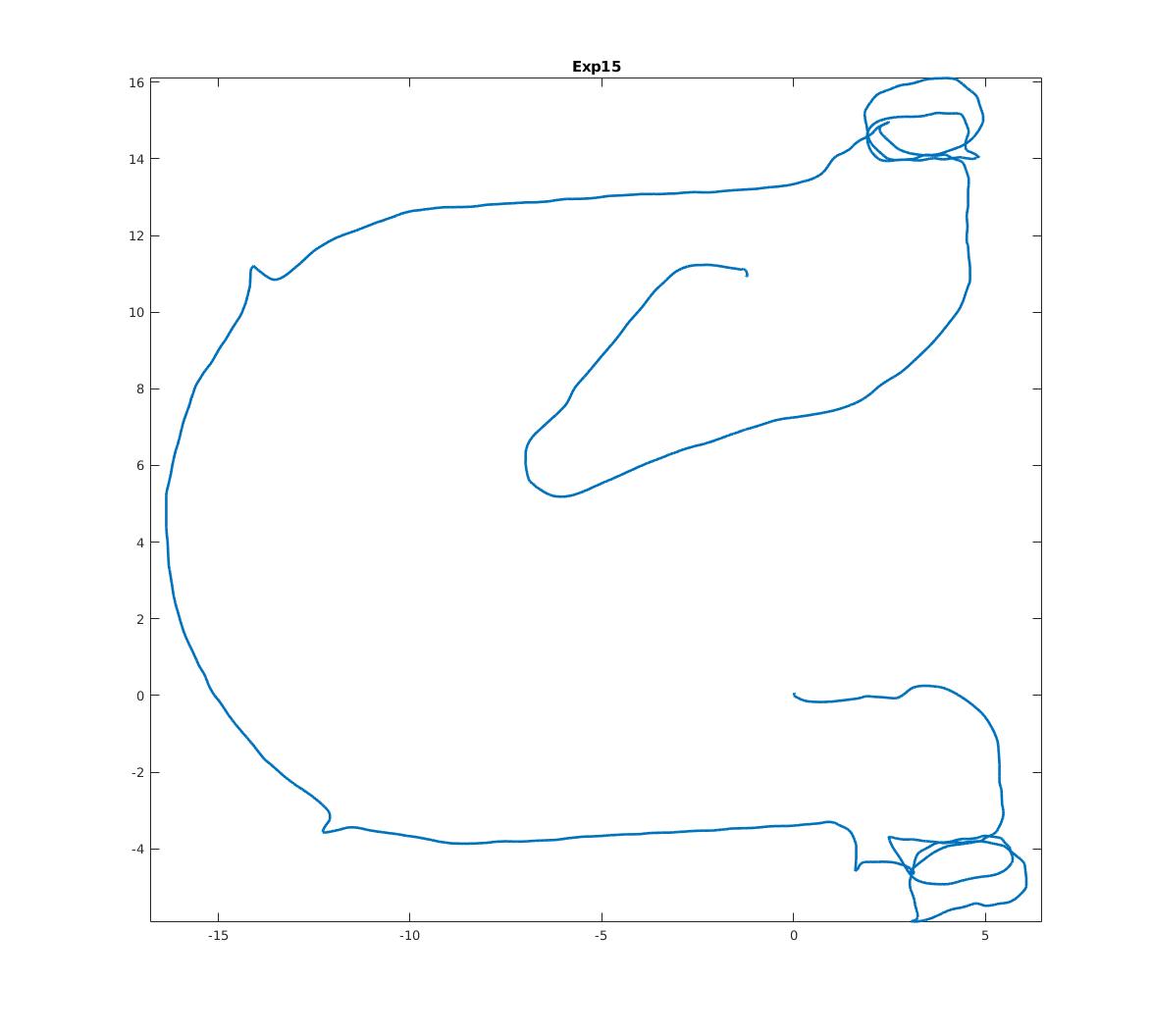

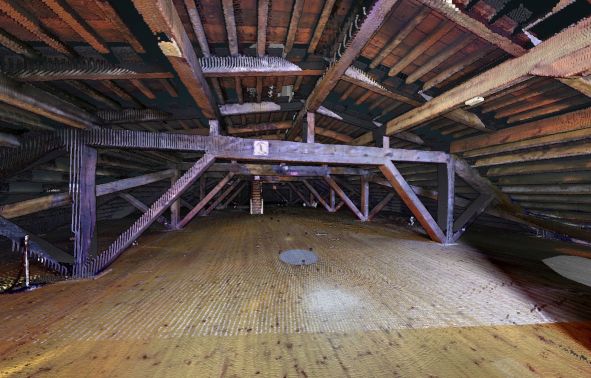

| Exp15 Attic to Upper Gallery |

X

|

X

|

Hard | 19 GB |

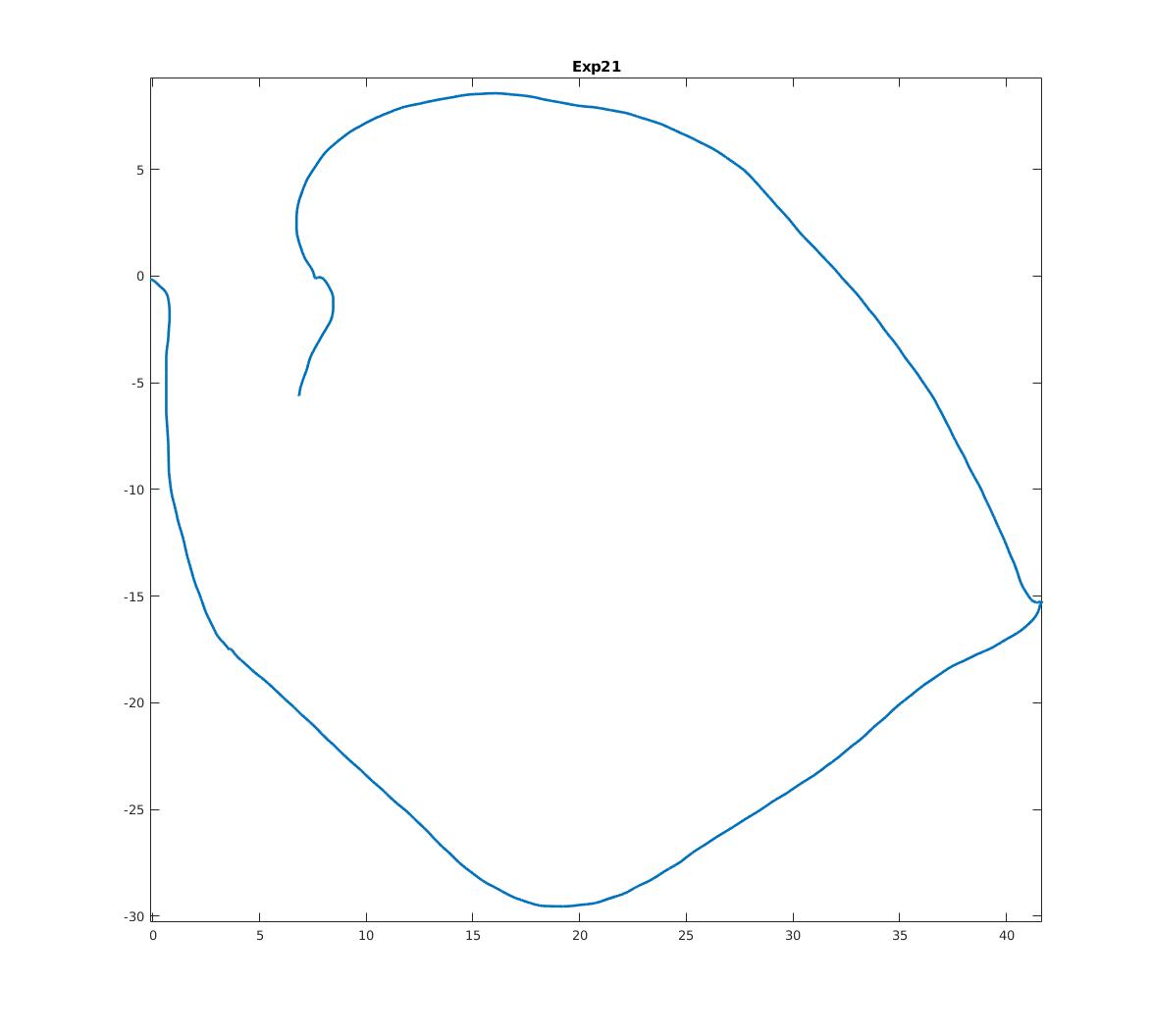

| Exp21 Outside Building |

X

|

X

|

Easy | 11 GB |

Additional Sequences

| Sequence | Difficulty | Ground truth* | Bag | |

|---|---|---|---|---|

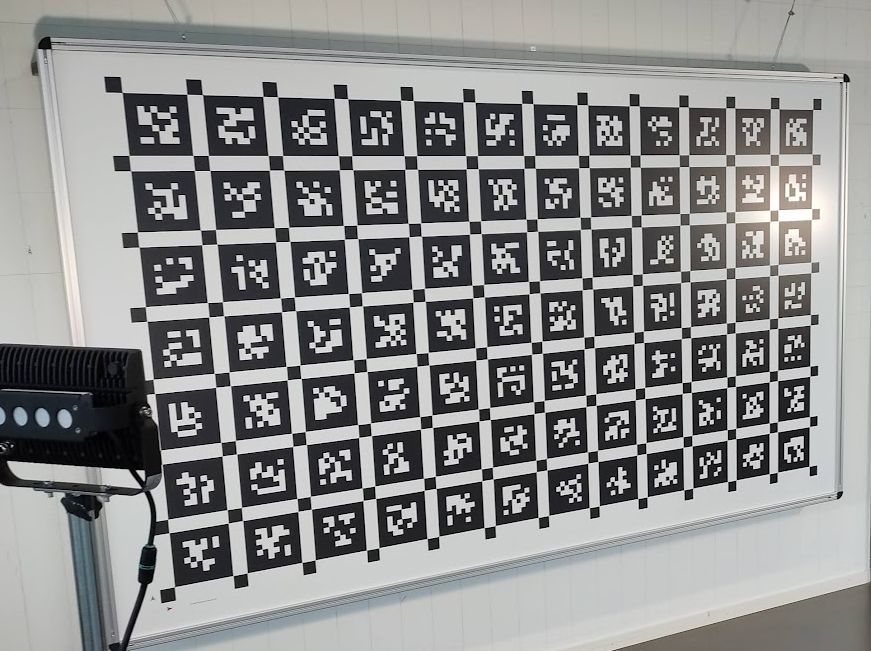

| Calibration Sequence (board description) |

X

|

7 GB | ||

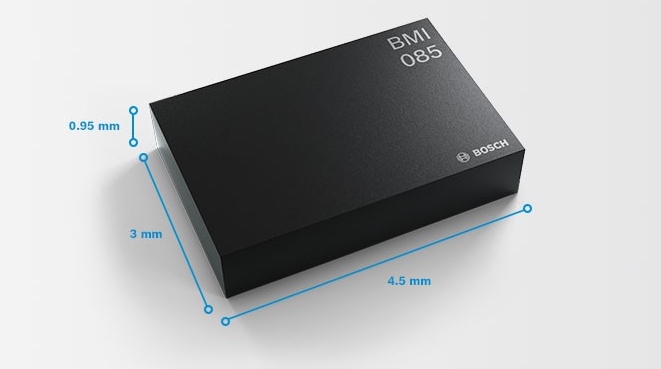

| IMU Noise Calibration |

X

|

150 MB | ||

| Exp04 Construction Upper Level 1 |

X

|

Easy | 6DOF | 10 GB |

| Exp05 Construction Upper Level 2 |

X

|

Easy | 6DOF | 10 GB |

| Exp06 Construction Upper Level 3 |

X

|

Medium | 6DOF | 12 GB |

| Exp10 Cupola 2 |

X

|

Hard | 3DOF | 27 GB |

| Exp14 Basement 2 |

X

|

Medium | 6DOF, 3DOF | 6 GB |

| Exp16 Attic to Upper Gallery 2 |

X

|

Hard | 6DOF, 3DOF | 15 GB |

| Exp18 Corridor Lower Gallery 2 |

X

|

Hard | 6DOF, 3DOF | 8 GB |

Exp23 The Sheldonian Slam Includes all sections and revisits the ground hall several times for loop closures |

X

|

Hard | 3DOF |

80 GB 0 1 2 |

HIGH-ACCURACY LASER SCANS

We release the full 3D terrestrial laser scans of the construction site and of the Sheldonian Theatre in the .e57 point-cloud format. They enable map-based insights when debugging algorithms.

We used the Z+F Imager 5016, a survey-grade terrestrial laser scanner, and registered the individual stations with Scantra. The reported standard deviation of the registration (sigma_t) is below 1 mm for all stations.

HARDWARE

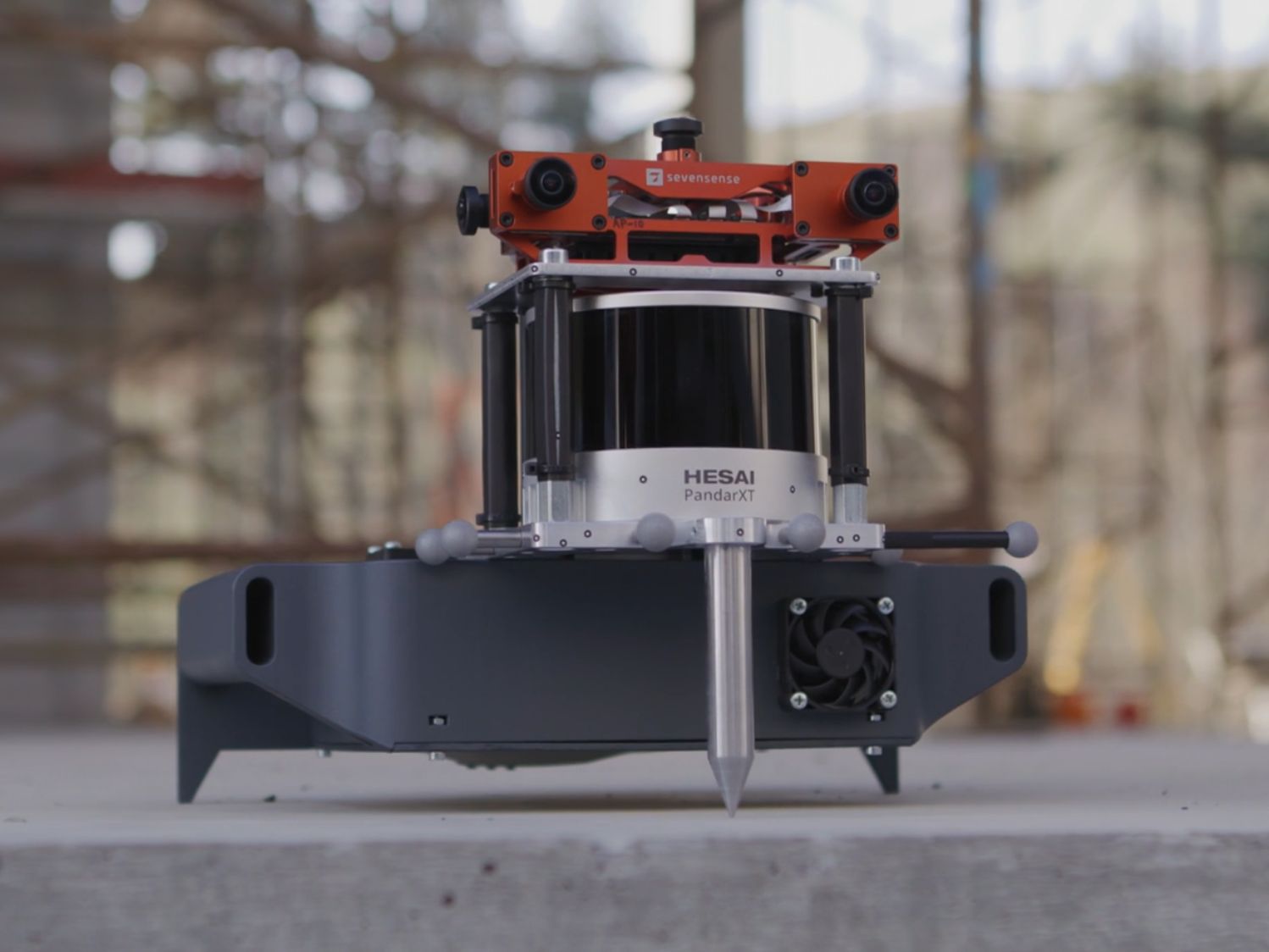

Our sensor suite consists of a Sevensense Alphasense Core camera head with five 0.4 MP global-shutter cameras and a Hesai PandarXT-32 lidar.

The sensors are mounted rigidly on an aluminium platform for handheld operation. An FPGA synchronizes the cameras with each other, and the cameras and the lidar are synchronized via PTP. The clocks of all sensors are aligned to within 1 ms. An external steel pin is attached to the setup.
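To give a rough sense of what a 1 ms timing bound means relative to millimeter-level ground truth, the sketch below evaluates a simple first-order bound on the displacement caused by a residual time offset: translation speed times the offset, plus the arc swept by a point at range r while the platform rotates. The helper `sync_error_m` and the motion values (1.5 m/s walking speed, 1 rad/s rotation, 10 m range) are illustrative assumptions, not measured properties of this dataset.

```python
def sync_error_m(dt_s, speed_mps=0.0, omega_radps=0.0, range_m=0.0):
    """First-order bound (metres) on the displacement caused by a timing offset dt_s.

    Hypothetical helper: translation v * dt plus the arc omega * r * dt swept by
    a point at range r while the platform rotates at omega.
    """
    return speed_mps * dt_s + omega_radps * range_m * dt_s

# Assumed handheld motion values, chosen only for illustration.
walk = sync_error_m(1e-3, speed_mps=1.5)                 # pure translation
turn = sync_error_m(1e-3, omega_radps=1.0, range_m=10.0)  # rotation, point 10 m away
print(f"translation: {walk * 1000:.1f} mm")
print(f"rotation at 10 m: {turn * 1000:.1f} mm")
```

Under these assumptions, a 1 ms offset corresponds to roughly 1.5 mm of translation and about 10 mm for a distant lidar point during a quick turn, which illustrates why tight synchronization matters for a millimeter-accurate benchmark.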

CALIBRATION

The calibration file in Kalibr-like format can be downloaded here. Alternatively, you can use the provided calibration sequence to compute your own calibration, or use the CAD model as an initial guess. The YAML file for the calibration board can be downloaded here. The CAD models can be downloaded as STP or STL files. Datasheets for the sensors:

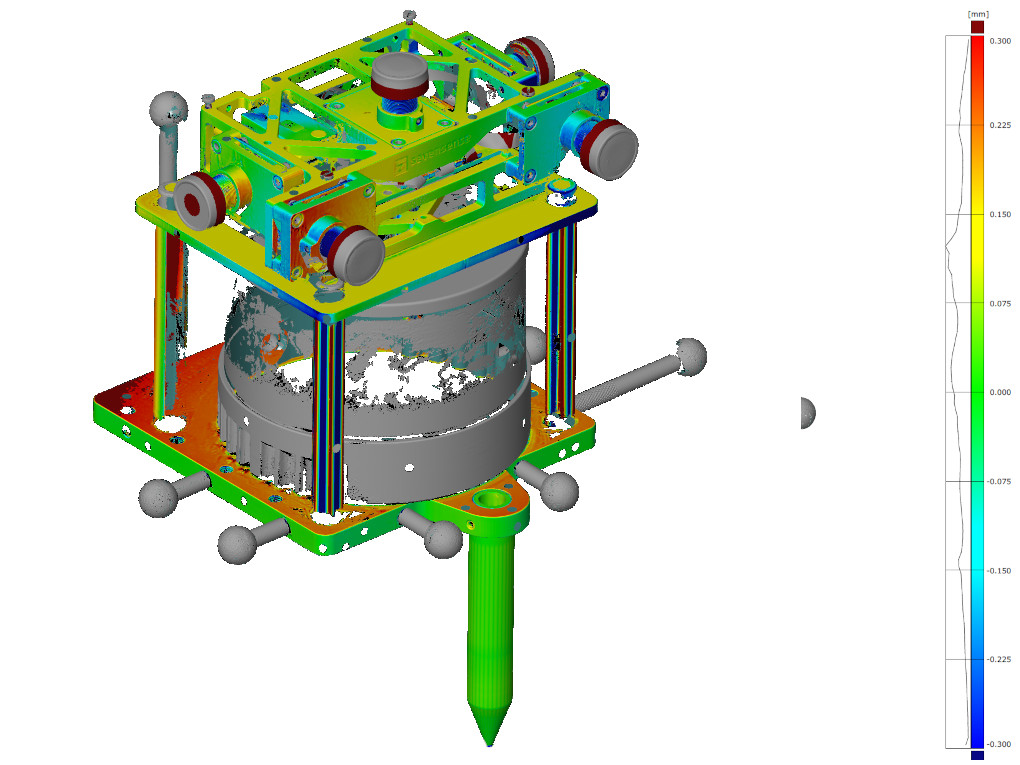
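Because the calibration file follows a Kalibr-like format, the following sketch shows how such fields are typically used: a 4x4 `T_cam_imu` maps points from the IMU frame into a camera frame, and the pinhole `intrinsics` `[fu, fv, pu, pv]` project them onto the image. It assumes Kalibr's camchain field names and conventions, which the released file may not match exactly; all numbers are placeholders rather than the actual calibration, and lens distortion (which a real camchain also specifies) is ignored.

```python
# Hypothetical excerpt mirroring Kalibr camchain fields; the numbers are
# placeholders, NOT the released calibration.
cam0 = {
    "T_cam_imu": [  # maps IMU-frame points into the cam0 frame (Kalibr convention)
        [1.0, 0.0, 0.0, 0.05],
        [0.0, 1.0, 0.0, 0.00],
        [0.0, 0.0, 1.0, 0.02],
        [0.0, 0.0, 0.0, 1.0],
    ],
    "intrinsics": [350.0, 350.0, 360.0, 270.0],  # fu, fv, pu, pv (placeholders)
    "resolution": [720, 540],
}

def transform_point(T, p):
    """Apply a 4x4 homogeneous transform (nested lists) to a 3D point."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))

def project_pinhole(intrinsics, p_cam):
    """Undistorted pinhole projection; returns None for points behind the camera."""
    fu, fv, pu, pv = intrinsics
    x, y, z = p_cam
    if z <= 0:
        return None
    return (fu * x / z + pu, fv * y / z + pv)

p_imu = (0.15, -0.1, 2.0)  # a point about 2 m ahead, expressed in the IMU frame
p_cam = transform_point(cam0["T_cam_imu"], p_imu)
uv = project_pinhole(cam0["intrinsics"], p_cam)
print(p_cam, uv)
```

For real use, the distortion model and coefficients from the calibration file would be applied before the pixel coordinates are compared with `resolution`.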

Additionally, we provide a 3D model of the sensor, generated with the GOM Atos Q scanner (accuracy in the micrometer range).

EVALUATION

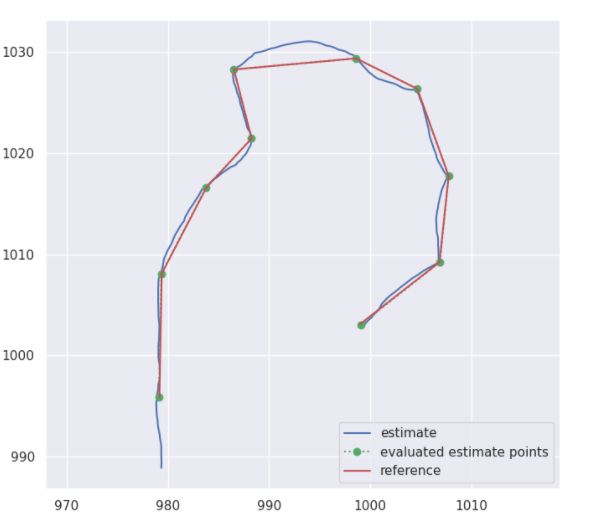

Submissions are ranked by the completeness of the trajectory and by its position accuracy, measured as the absolute trajectory error (ATE).
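ATE compares estimated and ground-truth positions after both trajectories have been brought into a common frame. The sketch below assumes positions already matched by timestamp and a closed-form 4-DOF alignment (yaw plus translation), a common choice for visual-inertial systems because gravity makes roll and pitch observable. The challenge's actual alignment procedure is not stated here, and the function names are illustrative.

```python
import math

def align_yaw_translation(est, gt):
    """Closed-form yaw + translation alignment of est onto gt (assumed 4-DOF model).

    est, gt: equal-length lists of (x, y, z) positions at matched timestamps.
    Returns (theta, t) such that R_z(theta) @ p + t best fits gt in least squares.
    """
    n = len(est)
    me = [sum(p[k] for p in est) / n for k in range(3)]
    mg = [sum(q[k] for q in gt) / n for k in range(3)]
    # Maximise sum_i q_i . R_z(theta) p_i over the centred xy coordinates.
    a = b = 0.0
    for p, q in zip(est, gt):
        px, py = p[0] - me[0], p[1] - me[1]
        qx, qy = q[0] - mg[0], q[1] - mg[1]
        a += qx * px + qy * py
        b += qy * px - qx * py
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    t = (mg[0] - (c * me[0] - s * me[1]),
         mg[1] - (s * me[0] + c * me[1]),
         mg[2] - me[2])
    return theta, t

def ate(est, gt):
    """Per-point position errors (metres) after alignment, and their RMSE."""
    theta, t = align_yaw_translation(est, gt)
    c, s = math.cos(theta), math.sin(theta)
    errors = []
    for p, q in zip(est, gt):
        aligned = (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1], p[2] + t[2])
        errors.append(math.dist(aligned, q))
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return errors, rmse

# Usage: an estimate that differs from ground truth only by a yaw rotation and a shift.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.5), (0.0, 2.0, 1.0)]
c0, s0 = math.cos(0.5), math.sin(0.5)
est = [(c0 * x - s0 * y + 2.0, s0 * x + c0 * y - 1.0, z + 0.3) for x, y, z in gt]
errors, rmse = ate(est, gt)
print(f"RMSE: {rmse:.6f} m")  # close to zero: the offset is a pure yaw + translation
```

The per-point `errors` list, rather than only the RMSE, is what a point-wise scoring scheme would consume.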

The score is computed from the ATE of individual points on the trajectory: depending on its error, each point adds between 0 and 10 points to the final score. This way, incomplete trajectories can also be evaluated. You can submit your current results at any time and receive an accuracy report through our submission system.
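The point-wise scoring can be sketched as follows. The `THRESHOLDS` table is a hypothetical placeholder: this text only says that each point earns between 0 and 10 points depending on its error. Points that a submission does not cover are marked `None` and contribute nothing, which is how an incomplete trajectory can still receive a partial score.

```python
# Hypothetical (max_error_m, points) table, ordered from strictest to loosest.
# The challenge's real thresholds are not given in this text.
THRESHOLDS = [(0.01, 10), (0.03, 6), (0.06, 5), (0.10, 3), (0.20, 1)]

def point_score(error_m):
    """Points for one evaluated trajectory point; missing (None) or large errors score 0."""
    if error_m is None:
        return 0
    for limit, points in THRESHOLDS:
        if error_m <= limit:
            return points
    return 0

def trajectory_score(errors):
    """Sum of per-point scores; uncovered points simply add nothing."""
    return sum(point_score(e) for e in errors)

errors = [0.004, 0.025, None, 0.5]  # None: the submission has no estimate for this point
print(trajectory_score(errors))  # 16
```

Because missing points score 0 instead of invalidating the run, a partial trajectory is still ranked; it simply cannot reach the maximum score.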

PUBLICATION

When using this work in an academic context, please cite the following publication:

@ARTICLE{9968057,

author={Zhang, Lintong and Helmberger, Michael and Fu, Lanke Frank Tarimo and Wisth, David and Camurri, Marco and Scaramuzza, Davide and Fallon, Maurice},

journal={IEEE Robotics and Automation Letters},

title={Hilti-Oxford Dataset: A Millimeter-Accurate Benchmark for Simultaneous Localization and Mapping},

year={2023},

volume={8},

number={1},

pages={408-415},

doi={10.1109/LRA.2022.3226077}}