The 2023 Dataset

The latest iteration of our benchmark is built from data recorded at several active construction sites, across multiple sessions and platforms. In addition to last year's handheld prototype, we introduce datasets recorded on a tracked robot platform.

This year's challenge extends evaluation to multi-session SLAM: solutions must operate robustly across locations and platforms, and accurately aggregate map information for each construction site from multiple SLAM runs. Both single-session and multi-session SLAM submissions are accepted, and each has its own leaderboard.

Challenge Sequences

Each location forms a multi-session SLAM group whose sequences overlap. However, each trajectory can also be evaluated individually as a single session.

| Location | Prototype | Sequence description | Bag size |
|---|---|---|---|
| Site 1 | Handheld | Floor 0 | 22.0 GB |
| Site 1 | Handheld | Floor 1 | 17.9 GB |
| Site 1 | Handheld | Floor 2 | 18.3 GB |
| Site 1 | Handheld | Underground | 31.9 GB |
| Site 1 | Handheld | Stairs | 17.1 GB |
| Site 2 | Robot | Parking (3 floors down) | 63.3 GB |
| Site 2 | Robot | Floor 1 large room | 27.7 GB |
| Site 2 | Robot | Floor 2 large room (dark) | 32.5 GB |
| Site 3 | Handheld | Underground 1 | 9.7 GB |
| Site 3 | Handheld | Underground 2 | 14.6 GB |
| Site 3 | Handheld | Underground 3 | 18.7 GB |
| Site 3 | Handheld | Underground 4 | 10.6 GB |
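For planning downloads, the table can be encoded as a small data structure and grouped by location, mirroring the multi-session groups described above. This is a minimal Python sketch; the tuple layout and the helper names `group_by_site` and `storage_per_site` are illustrative, and only the challenge sequences listed in the table are included (the additional Site 2 sequences are not).

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (location, platform, sequence description, bag size in GB), copied from the table above.
SEQUENCES: List[Tuple[str, str, str, float]] = [
    ("Site 1", "Handheld", "Floor 0", 22.0),
    ("Site 1", "Handheld", "Floor 1", 17.9),
    ("Site 1", "Handheld", "Floor 2", 18.3),
    ("Site 1", "Handheld", "Underground", 31.9),
    ("Site 1", "Handheld", "Stairs", 17.1),
    ("Site 2", "Robot", "Parking (3 floors down)", 63.3),
    ("Site 2", "Robot", "Floor 1 large room", 27.7),
    ("Site 2", "Robot", "Floor 2 large room (dark)", 32.5),
    ("Site 3", "Handheld", "Underground 1", 9.7),
    ("Site 3", "Handheld", "Underground 2", 14.6),
    ("Site 3", "Handheld", "Underground 3", 18.7),
    ("Site 3", "Handheld", "Underground 4", 10.6),
]


def group_by_site(sequences) -> Dict[str, List[Tuple[str, str, float]]]:
    """Group sequences by location; each group is one multi-session SLAM group."""
    groups: Dict[str, List[Tuple[str, str, float]]] = defaultdict(list)
    for site, platform, description, size_gb in sequences:
        groups[site].append((platform, description, size_gb))
    return dict(groups)


def storage_per_site(sequences) -> Dict[str, float]:
    """Total bag size per location in GB, rounded to one decimal place."""
    return {
        site: round(sum(size for _, _, size in members), 1)
        for site, members in group_by_site(sequences).items()
    }


if __name__ == "__main__":
    sizes = storage_per_site(SEQUENCES)
    for site, members in group_by_site(SEQUENCES).items():
        print(f"{site}: {len(members)} sequences, {sizes[site]} GB")
    print(f"Total: {round(sum(sizes.values()), 1)} GB")
```

Run as a script, it reports 107.2 GB for Site 1, 123.5 GB for Site 2 and 53.6 GB for Site 3, about 284 GB for all challenge sequences.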

For the leaderboard, submit results only for the challenge sequences. To improve accuracy at Site 2, use the corresponding additional sequences to perform multi-device SLAM. Please note that the additional Site 2 sequences contain rapidly flashing lights in their video streams, which may affect sensitive viewers; viewer discretion is advised.

We have identified a vulnerability in our scoring reports that could indirectly help estimate the ground truth, and that has enabled some teams to falsify results. While we have techniques to exclude such submissions from the competition leaderboard, we wish to avoid incorrectly penalizing teams that perform well legitimately. We have therefore released an additional dataset location (Site 3) with four handheld sequences, which will be scored without the in-depth analysis plots.

Scoring update: We have doubled the per-trajectory score for Site 3, to a maximum of 200 points per trajectory. Site 3 therefore contributes 50% of a submission's overall score, and Sites 1 and 2 together contribute the other 50%. Submissions made before the release of Site 3 remain valid, but the maximum achievable score with Site 3 included has increased from 800 to 1600.
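The 800 and 1600 figures follow from the sequence table: Sites 1 and 2 contain eight trajectories in total, which implies a maximum of 100 points per trajectory before the update (800 / 8), while each of Site 3's four trajectories is worth up to 200. A minimal Python sketch of that arithmetic; the names `SEQUENCES_PER_SITE`, `MAX_POINTS_PER_TRAJECTORY` and `max_score` are hypothetical, and the 100-point value is inferred rather than stated.

```python
# Challenge sequences per location, counted from the sequence table.
SEQUENCES_PER_SITE = {"Site 1": 5, "Site 2": 3, "Site 3": 4}

# Maximum points per trajectory. 100 for Sites 1 and 2 is inferred from 800 / 8;
# 200 for Site 3 is stated in the scoring update.
MAX_POINTS_PER_TRAJECTORY = {"Site 1": 100, "Site 2": 100, "Site 3": 200}


def max_score(sites) -> int:
    """Maximum achievable score over the given locations."""
    return sum(SEQUENCES_PER_SITE[s] * MAX_POINTS_PER_TRAJECTORY[s] for s in sites)


if __name__ == "__main__":
    before = max_score(["Site 1", "Site 2"])            # 5*100 + 3*100 = 800
    after = max_score(["Site 1", "Site 2", "Site 3"])   # 800 + 4*200 = 1600
    print(before, after, max_score(["Site 3"]) / after)  # 800 1600 0.5
```

The last value confirms that Site 3 accounts for half of the maximum score.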

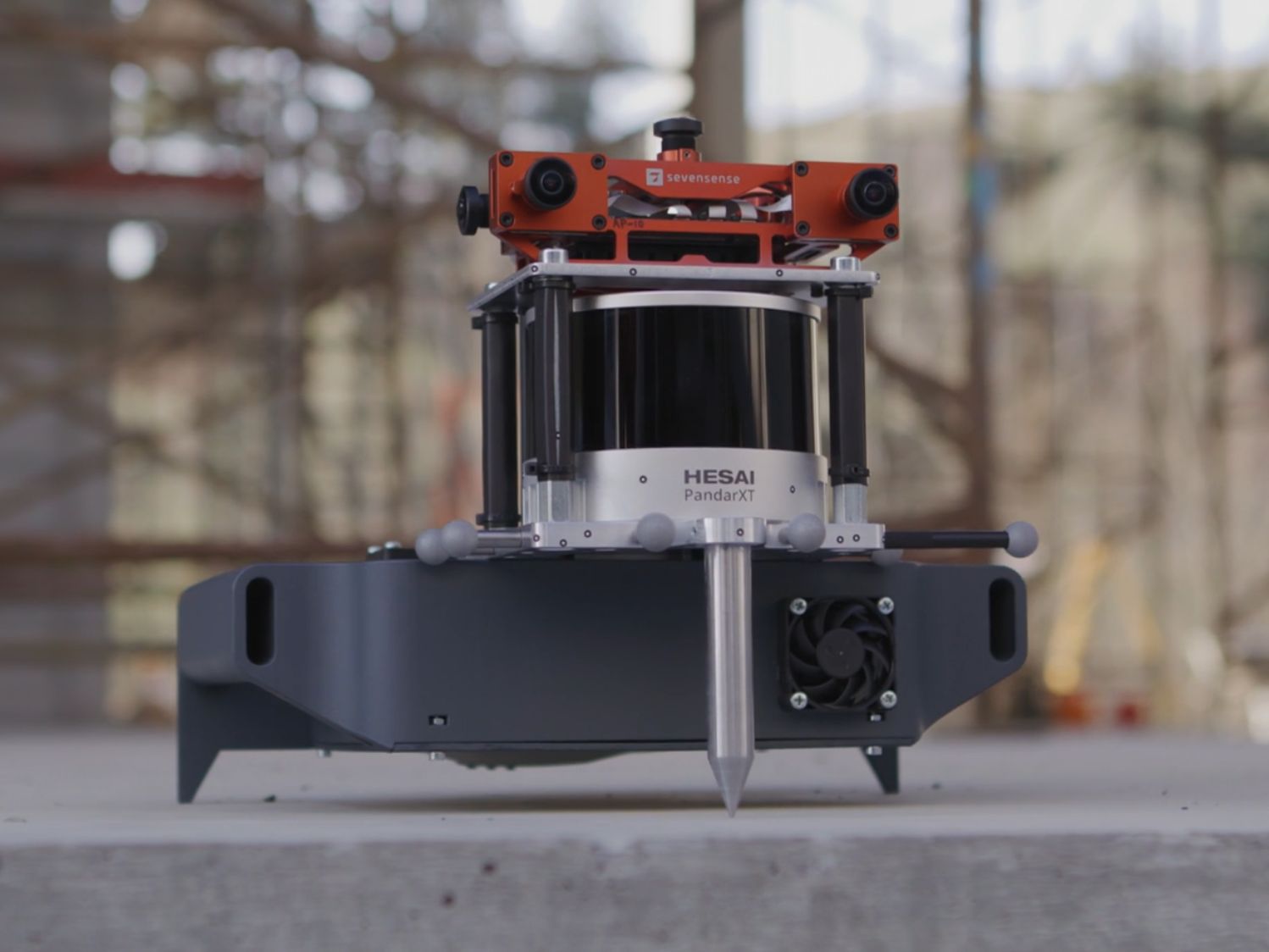

HANDHELD SENSOR SUITE

Our sensor suite consists of a Sevensense Alphasense Core camera head with 5x 0.4MP global shutter cameras, and a Hesai PandarXT-32.

The sensors are rigidly mounted on an aluminium platform for handheld operation. The cameras are synchronized with each other by an FPGA, and the cameras and the LiDAR are synchronized via PTP. All sensor clocks are aligned to within 1 ms. An external steel pin is attached to the setup.

ROBOT SENSOR SUITE

The new robot-mounted sensor suite consists of a Robosense BPearl hemispherical LiDAR, an Xsens MTi-670 IMU and 4x OAK-D cameras. The sensors are mounted on a rigid frame on a drilling robot platform inspired by Hilti's iconic Jaibot.

The sensors are synchronized using a combination of PTP and hardware triggering. The IMU and LiDAR clocks are aligned to within 1 ms, while the IMU and camera clocks are aligned to within 2 ms.
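The robot suite's timing is specified relative to the IMU, with no direct camera-to-LiDAR figure. Assuming the quoted values bound the difference between the clock offsets $\delta$ of each sensor pair, the triangle inequality gives a worst-case camera-to-LiDAR bound. This is a derived upper bound, not a measured value:

$$
\left|\delta_{\mathrm{cam}} - \delta_{\mathrm{LiDAR}}\right|
\le \left|\delta_{\mathrm{cam}} - \delta_{\mathrm{IMU}}\right| + \left|\delta_{\mathrm{IMU}} - \delta_{\mathrm{LiDAR}}\right|
\le 2\,\mathrm{ms} + 1\,\mathrm{ms} = 3\,\mathrm{ms}
$$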

EVALUATION

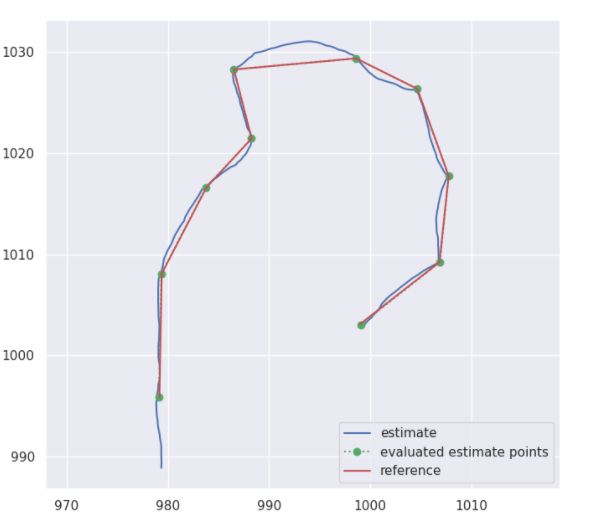

Submissions are ranked on both the completeness of the trajectory and its position accuracy (absolute trajectory error, ATE). Single-session and multi-session SLAM submissions are ranked on separate leaderboards.

The score is based on the ATE of individual points on the trajectory: depending on its error, each point adds between 0 and 20 points to your final score. Incomplete trajectories can therefore still be included in the evaluation. Use our submission system to evaluate your SLAM solution. For multi-session SLAM submissions, all trajectories from a site are expected to be expressed in a single, unified coordinate reference frame, regardless of which prototype recorded them.
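A minimal Python sketch of per-point scoring follows, under loudly stated assumptions: the error bands in `SCORE_BANDS` are hypothetical placeholders (only the 0 to 20 point range comes from the text), points are matched by a hypothetical shared ID rather than by the official matching procedure, and the estimate is assumed to already be expressed in the ground-truth frame. It is not the official evaluator, and it does not model how per-point scores are combined into the per-trajectory maximum. It does illustrate why incomplete trajectories still score: a missing point contributes 0 instead of invalidating the trajectory.

```python
import math
from typing import Dict, List, Optional, Tuple

Point3 = Tuple[float, float, float]

# HYPOTHETICAL bands: (maximum position error in metres, points awarded).
# Only the 0 to 20 point range comes from the challenge description.
SCORE_BANDS: List[Tuple[float, int]] = [(0.01, 20), (0.03, 15), (0.06, 10), (0.10, 5)]


def point_score(error_m: float) -> int:
    """Return the points for one position error; errors beyond the last band score 0."""
    for max_error_m, points in SCORE_BANDS:
        if error_m <= max_error_m:
            return points
    return 0


def score_trajectory(
    estimate: Dict[str, Point3], ground_truth: Dict[str, Point3]
) -> Tuple[int, Dict[str, Optional[float]]]:
    """Score each ground-truth point that is present in the estimate.

    Assumes both trajectories are already in the same coordinate frame and that
    points are matched by a (hypothetical) shared ID. Missing points score 0.
    """
    errors: Dict[str, Optional[float]] = {}
    total = 0
    for point_id, gt_xyz in ground_truth.items():
        est_xyz = estimate.get(point_id)
        if est_xyz is None:
            errors[point_id] = None  # not estimated: contributes 0 points
            continue
        error_m = math.dist(est_xyz, gt_xyz)  # Euclidean position error
        errors[point_id] = error_m
        total += point_score(error_m)
    return total, errors


if __name__ == "__main__":
    gt = {"p1": (0.0, 0.0, 0.0), "p2": (1.0, 0.0, 0.0), "p3": (2.0, 0.0, 0.0)}
    est = {"p1": (0.005, 0.0, 0.0), "p2": (1.05, 0.0, 0.0)}  # p3 missing
    total, errors = score_trajectory(est, gt)
    print(total)  # 20 + 10 + 0 = 30 under the hypothetical bands
```

Keeping the bands in a sorted list makes it easy to swap in the official thresholds once they are known.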

Robotics and Perception Group, University of Zürich

Our mission is to research the fundamental challenges of robotics and computer vision that will benefit all of humanity. Our key interest is to develop autonomous machines that can navigate all by themselves using only onboard cameras and computation, without relying on external infrastructure, such as GPS or position tracking systems, or on off-board computing.

Confida Holding

Active since 1964, the CONFIDA Group is today one of the leading companies in Liechtenstein for construction and real estate services, tax and management consulting, accounting and auditing, with a workforce of approximately 70. As a tradition-conscious company, the CONFIDA Group strengthens and maintains the trust placed in it through its actions and services, and meets the challenges of changing times.

Gebr. Hilti AG

Active since 1876, Gebr. Hilti AG is a general contractor operating in all areas of building construction, civil engineering and road construction, and has continuously optimized and extended its range of services. It currently has 204 employees.